Ijraset Journal For Research in Applied Science and Engineering Technology


Sign Language Recognition

Authors: Divyanshu Pal, Asst. Professor Rohini Sharma, Dheeraj, Abdul Bazid, Gaurav Chandel

DOI Link: https://doi.org/10.22214/ijraset.2024.63056


Abstract

Sign language has long been a significant means of communication for people with hearing and speech impairments. It is often the only way such individuals can convey their messages to others, so other people need to understand their language. In this project, we propose a web-based sign language detection and recognition framework built on image processing. The dataset used is a Sign Language dataset. The application could be used in schools or other settings to make communication easier between people with and without hearing and speech impairments. The method applies Deep Learning for image recognition, and the data is trained using Random Forest. With this approach, the system recognizes the gesture and predicts which sign is shown.


I. INTRODUCTION

Communication is a fundamental aspect of human interaction, and for the deaf and hard-of-hearing community, sign language serves as a primary means of expression. Despite its importance, there is a persistent challenge in enabling effective communication between people who use sign language and those who do not. Conventional ways of bridging this gap, such as interpreters or written communication, are often insufficient or impractical in many situations. This limitation has prompted the exploration of technological solutions that empower the deaf and hard-of-hearing community, fostering inclusivity and independence in communication.

This challenge is further magnified by the diverse range of sign languages used globally, each with its own set of unique gestures and expressions. As a result, a reliable system capable of real-time sign language detection has become an essential need, with the potential to break down communication barriers and foster understanding. The emergence of computer vision and machine learning technologies offers a promising platform to address this challenge. By developing a system that recognizes and interprets sign language gestures in real time, we can create a tool that improves communication accessibility for people who rely on sign language. Machine learning, artificial intelligence, and image processing are advancing rapidly and are now applied across many domains; this paper uses deep learning.

Many factors must be taken into consideration in sign language recognition. The angle of the gesture plays a very important role.

The type of dataset also strongly affects the recognition model.

II. REFERENCE

| Research Paper | Author | Publication | Remark |
|---|---|---|---|
| [1] A Linguistic Feature Vector for the Visual Interpretation of Sign Language | Richard Bowden, David Windridge, Timor Kadir, Andrew Zisserman, Michael Brady | ResearchGate, 2004 | High classification rates achieved with "one-shot" training. |
| [2] Deaf-Mute Communication Interpreter | Munirathnam Dhanalakshmi | ResearchGate, 2013 | The device is designed to input gesture values automatically for each user. |
| [3] The Leap Motion controller: A view on sign language | Potter LE, Araullo J, Carter L | Griffith University, 2013 | Traditional gesture recognition systems have been developed for Indian sign language. |
| [4] Real Time Hand Gesture Recognition System: A Review | Shaikh Shabnam, Dr. Shah Aqueel | IJCSN, 2015 | Reviews various approaches to hand gesture recognition. |
| [5] A Review Paper on Sign Language Recognition System For Deaf And Dumb People using Image Processing | Manisha U. Kakde, Mahender G. Nakrani, Amit M. Rawate | IJERT, 2016 | Compares sign language recognition techniques by sign acquisition method and sign identification method. |
| [6] Real time two way communication approach for hearing impaired and dumb person based on image processing | Rajesh Autee, Vitthal Bhosale | ResearchGate, 2016 | Uses various image processing techniques and algorithms for feature extraction. |
| [7] Face Recognition System | Shivam Singh, Prof. S. Graceline Jasmine | IJERT, 2019 | Uses face recognition algorithms. |
| [8] Translation of Sign Language for Deaf and Dumb People | Tamilselvan K.S, Suthagar S | ResearchGate, 2020 | Aims to analyze and translate sign language hand gestures into text and voice. |
| [9] Sign Language Detection | Pavitra Kadiyala | IRJET, 2021 | Classifies sign language symbols (93.7%). |
| [10] Gesture Tool – Aiding Disabled Via AI | Abhishek Kumar Saxena | ISSN, 2021 | Gesture images were captured under different lighting conditions (94%). |

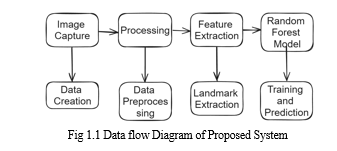

III. METHODOLOGY

Creating a sign language recognition system in Python involves several steps. Here is an overview of one possible approach, tuned specifically for Sign Language (SL) recognition:

- Data Collection: Gather a range of videos or images illustrating various signs in Sign Language. This may require collaborating with sign language professionals and native signers to create the dataset.

- Data Preprocessing: Preprocess the collected data to standardize the format, size, and quality of the images or frames. This can include resizing, normalization, noise reduction, and background removal.

- Feature Extraction: Extract meaningful features from the preprocessed data. Features might include hand signals, finger movements, hand shapes, and other relevant Sign Language features.

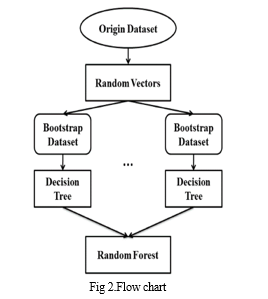

- Model Selection: Random Forest. Since this is a 26-class problem, we tried Random Forest with HOG feature vectors on the compressed images. It reached 46.45% 4-fold cross-validated accuracy, somewhat short of the multiclass SVM. Our explanation is that the decision tree implementation in Python is monothetic (each split tests a single feature), which may lead to rectangular decision regions, whereas the actual class boundaries may not be rectangular.

- Model Training: Train the selected model on the preprocessed data. This involves splitting the dataset into training and validation sets, feeding the data into the model, and optimizing the model parameters with methods such as gradient descent.

- Model Evaluation: Assess the trained model's performance using metrics such as accuracy, precision, recall, and F1-score, and test the model on a separate test set to gauge how well it generalizes.

- Deployment: Deploy the trained model in a real-world application. This may involve creating a user-friendly interface, integrating with devices such as cameras or sensors, and optimizing for real-time performance.

- Continuous Improvement: Keep improving and updating the model based on user feedback and ongoing data collection. This may involve retraining the model with additional data, fine-tuning hyperparameters, or updating the model architecture.
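
The feature-extraction and model-selection steps above rely on HOG (Histogram of Oriented Gradients) feature vectors. The paper does not show its HOG code, so the following is a minimal pure-Python sketch under our own assumptions: a grayscale image given as a 2-D list of floats, 4x4-pixel cells, 9 unsigned orientation bins, and one global L2 normalization. Standard HOG additionally interpolates between bins and normalizes overlapping blocks of cells; those refinements are omitted here.

```python
import math


def hog_descriptor(image, cell_size=4, num_bins=9):
    """Simplified HOG: per-cell histograms of unsigned gradient orientation,
    weighted by gradient magnitude, concatenated and L2-normalized.
    image: 2-D list of grayscale floats (rows of equal length)."""
    height, width = len(image), len(image[0])
    cells_y, cells_x = height // cell_size, width // cell_size
    hist = [[[0.0] * num_bins for _ in range(cells_x)] for _ in range(cells_y)]
    bin_width = 180.0 / num_bins
    for y in range(cells_y * cell_size):
        for x in range(cells_x * cell_size):
            # Central differences, clamped at the image border.
            gx = image[y][min(x + 1, width - 1)] - image[y][max(x - 1, 0)]
            gy = image[min(y + 1, height - 1)][x] - image[max(y - 1, 0)][x]
            magnitude = math.hypot(gx, gy)
            # Unsigned orientation in [0, 180) degrees.
            angle = math.degrees(math.atan2(gy, gx)) % 180.0
            b = int(angle // bin_width) % num_bins
            hist[y // cell_size][x // cell_size][b] += magnitude
    features = [v for row in hist for cell in row for v in cell]
    norm = math.sqrt(sum(v * v for v in features)) or 1.0
    return [v / norm for v in features]
```

For example, an 8x8 image whose left half is 0.0 and right half is 1.0 has only horizontal gradients, so each of the four cells contributes weight only to the 0-degree bin.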

A. Proposed Model

Camera Input: The system begins by capturing video frames from a camera feed.

Preprocessing: Preprocessing converts the raw video frames into a format suitable for analysis. Techniques such as resizing, normalization, and noise reduction may be applied to enhance frame quality.
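
The paper does not specify which normalization or noise-reduction techniques it applies. As a minimal sketch, assuming each frame is a grayscale image stored as a 2-D list of pixel intensities, the code below uses min-max normalization to [0, 1] followed by a 3x3 mean filter; both choices are ours, and resizing is omitted for brevity.

```python
def min_max_normalize(image):
    """Linearly rescale pixel values to the range [0, 1]."""
    lo = min(min(row) for row in image)
    hi = max(max(row) for row in image)
    span = (hi - lo) or 1  # a constant frame would otherwise divide by zero
    return [[(p - lo) / span for p in row] for row in image]


def mean_filter_3x3(image):
    """Noise reduction: replace each pixel by the mean of its 3x3
    neighbourhood; neighbours outside the frame are simply ignored."""
    h, w = len(image), len(image[0])
    out = []
    for y in range(h):
        row = []
        for x in range(w):
            vals = [image[j][i]
                    for j in range(max(0, y - 1), min(h, y + 2))
                    for i in range(max(0, x - 1), min(w, x + 2))]
            row.append(sum(vals) / len(vals))
        out.append(row)
    return out


def preprocess(frame):
    """Normalize first, then smooth."""
    return mean_filter_3x3(min_max_normalize(frame))
```

For instance, `preprocess([[0, 255], [255, 0]])` gives `[[0.5, 0.5], [0.5, 0.5]]`, because every pixel's neighbourhood covers the whole 2x2 frame.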

Hand Extraction: Because manually designed feature descriptors may not be efficient, we start with SIFT (Scale-Invariant Feature Transform) features, which compute keypoints in the image and are better suited than describing features by hand. After the images are skin-segmented using the YUV-YIQ color model, feature vectors are extracted from the segmented images.
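
The paper segments skin with the YUV and YIQ color models but gives neither conversion formulas nor thresholds. A minimal sketch, assuming one approach described in the skin-detection literature that combines the YUV hue angle θ = atan2(V, U) with the YIQ in-phase component I: the RGB conversion coefficients are the standard ones, while `THETA_RANGE` and `I_RANGE` are illustrative placeholders, not values from the paper, and would need tuning on real data.

```python
import math

# Hypothetical thresholds, for illustration only (not taken from the paper).
THETA_RANGE = (105.0, 150.0)  # YUV chroma hue angle, in degrees
I_RANGE = (30.0, 100.0)       # YIQ in-phase (I) component


def yuv_hue_angle(r, g, b):
    """Angle of the (U, V) chroma vector in YUV space, in degrees."""
    u = -0.147 * r - 0.289 * g + 0.436 * b
    v = 0.615 * r - 0.515 * g - 0.100 * b
    return math.degrees(math.atan2(v, u))


def yiq_i(r, g, b):
    """In-phase (I) chroma component of YIQ."""
    return 0.596 * r - 0.274 * g - 0.322 * b


def is_skin(r, g, b):
    """A pixel counts as skin only if both color-space tests pass."""
    theta = yuv_hue_angle(r, g, b)
    i = yiq_i(r, g, b)
    return (THETA_RANGE[0] <= theta <= THETA_RANGE[1]
            and I_RANGE[0] <= i <= I_RANGE[1])


def skin_mask(image):
    """image: 2-D list of (r, g, b) tuples with 0-255 channels.
    Returns a 2-D list of booleans marking skin pixels."""
    return [[is_skin(*px) for px in row] for row in image]
```

With these placeholder thresholds, a light skin tone such as (224, 172, 140) has θ ≈ 121.5° and I ≈ 41.3, so it is accepted, while pure blue (0, 0, 255) is rejected.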

Frame Transformation: The extracted hand region is transformed into a format suitable for input to the CNN model. This might involve resizing, cropping, and converting the hand region into a suitable data structure (e.g., arrays or tensors).
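
A sketch of this transformation, assuming the hand has already been segmented into a boolean mask of the same size as the frame (a hypothetical input): crop the frame to the mask's bounding box, resize it with nearest-neighbour sampling to a fixed size, and optionally flatten it. The 32x32 default is an illustrative choice; a CNN would normally take the 2-D grid, while classical classifiers take the flattened vector.

```python
def crop_to_mask(image, mask):
    """Crop `image` to the bounding box of the True pixels in `mask`."""
    rows = [y for y, mask_row in enumerate(mask) if any(mask_row)]
    cols = [x for x in range(len(mask[0])) if any(mask_row[x] for mask_row in mask)]
    if not rows:
        raise ValueError("mask contains no hand pixels")
    top, bottom, left, right = rows[0], rows[-1], cols[0], cols[-1]
    return [row[left:right + 1] for row in image[top:bottom + 1]]


def resize_nearest(image, out_h, out_w):
    """Nearest-neighbour resize of a 2-D list to out_h rows x out_w columns."""
    h, w = len(image), len(image[0])
    return [[image[y * h // out_h][x * w // out_w] for x in range(out_w)]
            for y in range(out_h)]


def hand_to_input(image, mask, size=(32, 32)):
    """Crop the hand region and resize it to a fixed (rows, cols) size."""
    return resize_nearest(crop_to_mask(image, mask), *size)


def flatten(grid):
    """Row-major flattening into a feature vector."""
    return [value for row in grid for value in row]
```

Fixing the output size matters because the downstream model expects inputs of identical shape regardless of how large the hand appears in the frame.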

Gesture Prediction: Multiclass SVMs were tried on all the feature vectors. Results were obtained with a linear kernel, and fourfold cross-validated accuracies are reported for all feature vectors. The confusion matrices shown in the results section below correspond to the different techniques tried using linear-kernel multiclass SVMs. This algorithm gave the best accuracies. Our attempt with the 'rbf' kernel failed badly on HOG feature vectors, reaching only 4.76% accuracy.
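
The paper reports linear-kernel multiclass SVMs but does not name the implementation. The sketch below shows one way a one-vs-rest linear SVM can be trained in pure Python, using Pegasos-style stochastic subgradient descent on the L2-regularized hinge loss. The optimizer, `lam`, `epochs`, and the fixed (non-shuffled) pass order are our assumptions; for simplicity the bias is regularized along with the weights. In practice a library implementation such as scikit-learn's `LinearSVC` would normally be used.

```python
def train_linear_svm(X, y, lam=0.01, epochs=200):
    """Binary linear SVM for labels +1/-1 via Pegasos-style subgradient descent."""
    Xb = [list(x) + [1.0] for x in X]  # constant feature so the last weight acts as a bias
    w = [0.0] * len(Xb[0])
    t = 0
    for _ in range(epochs):
        for xi, yi in zip(Xb, y):
            t += 1
            eta = 1.0 / (lam * t)  # Pegasos step size
            margin = yi * sum(wj * xj for wj, xj in zip(w, xi))
            w = [(1.0 - eta * lam) * wj for wj in w]  # step on the L2 term
            if margin < 1.0:  # hinge loss is active: move towards yi * xi
                w = [wj + eta * yi * xj for wj, xj in zip(w, xi)]
    return w


def train_one_vs_rest(X, labels, **kwargs):
    """One binary SVM per class: that class is +1, all other classes are -1."""
    return {c: train_linear_svm(X, [1 if l == c else -1 for l in labels], **kwargs)
            for c in sorted(set(labels))}


def predict(models, x):
    """Return the class whose linear score w . [x, 1] is largest."""
    xb = list(x) + [1.0]
    return max(models, key=lambda c: sum(wj * xj for wj, xj in zip(models[c], xb)))
```

One-vs-rest turns the multiclass problem into one binary problem per gesture class, which matches how many linear SVM libraries handle more than two classes.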

IV. RESULTS AND DISCUSSION

The study involved training and evaluating three classifiers for sign language gesture detection. The Neural Network outperformed k-Nearest Neighbours and Random Forest with an accuracy of 90.91%. The KNN model achieved 82.43% accuracy, while the Random Forest achieved 75.67%. The superior performance of the Neural Network suggests it is the most suitable model for this task, balancing complexity and accuracy effectively. The best-performing model (Neural Network) was saved for real-time gesture recognition, demonstrating significant potential for practical applications in sign language translation systems.
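
To make the k-Nearest Neighbours baseline concrete, here is a minimal pure-Python sketch with Euclidean distance, majority voting, and a k-fold accuracy estimate. The choice k=3, the fold assignment by index, and the toy data below are our assumptions, not details from the paper.

```python
import math
from collections import Counter


def knn_predict(train_X, train_y, x, k=3):
    """Label x by majority vote among its k nearest training points."""
    nearest = sorted(zip(train_X, train_y), key=lambda pair: math.dist(pair[0], x))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]


def accuracy(train_X, train_y, test_X, test_y, k=3):
    """Fraction of test samples whose predicted label matches the true label."""
    hits = sum(knn_predict(train_X, train_y, x, k) == y for x, y in zip(test_X, test_y))
    return hits / len(test_y)


def k_fold_accuracy(X, y, folds=4, k=3):
    """Mean accuracy over `folds` folds; sample i is held out in fold i % folds."""
    scores = []
    for f in range(folds):
        train = [i for i in range(len(X)) if i % folds != f]
        test = [i for i in range(len(X)) if i % folds == f]
        scores.append(accuracy([X[i] for i in train], [y[i] for i in train],
                               [X[i] for i in test], [y[i] for i in test], k))
    return sum(scores) / folds
```

On two well-separated toy clusters, such as four points near (0, 0) labeled "A" and four near (5, 5) labeled "B", every fold classifies both held-out points correctly. KNN has no training phase, so its cost is paid at prediction time, which matters for real-time use.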

Across 22 test runs, the comparative analysis reported average accuracies of 80.95% for the Neural Network, 82.39% for KNN, and 75.30% for Random Forest. The Neural Network reached a peak accuracy of 93.26% on our test dataset. Among all the preprocessing techniques tested, the combination of Rounding, Shifting, and Scaling proved the most efficient, consistently yielding high accuracies for all three algorithms. Consequently, a processing pipeline was devised to function as an SL identification system.
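
The paper does not define Rounding, Shifting, and Scaling precisely. One plausible reading, assuming each sample is a list of 2-D hand keypoint coordinates with a reference point such as the wrist listed first (our assumption), is sketched below: shift so the reference point is at the origin, scale by the largest absolute coordinate, and round to a fixed number of decimals. The order of operations, the hypothetical function name `round_shift_scale`, and `decimals=2` are also assumptions.

```python
def round_shift_scale(landmarks, decimals=2):
    """landmarks: list of (x, y) keypoints; landmarks[0] is the reference point.
    Returns a flat feature vector [x0, y0, x1, y1, ...]."""
    ref_x, ref_y = landmarks[0]
    # Shifting: coordinates relative to the reference keypoint (translation invariance).
    shifted = [(x - ref_x, y - ref_y) for x, y in landmarks]
    # Scaling: divide by the largest absolute coordinate so values lie in [-1, 1].
    extent = max(max(abs(x), abs(y)) for x, y in shifted) or 1.0
    # Rounding: keep a fixed number of decimals to damp small frame-to-frame jitter.
    return [round(v / extent, decimals) for point in shifted for v in point]
```

Under this reading, the same hand shape captured at a different position or distance from the camera maps to the same vector: `[(100, 200), (150, 200), (100, 100)]` and `[(10, 20), (15, 20), (10, 10)]` both become `[0.0, 0.0, 0.5, 0.0, 0.0, -1.0]`.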

Conclusion

In this study, we explored the development of an American Sign Language (ASL) recognition system using the Random Forest classifier, alongside comparative analyses with k-Nearest Neighbors (kNN) and a proposed Neural Network model. Our findings highlighted the significant impact of preprocessing techniques on the accuracy of these models, with the Random Forest classifier achieving a notable performance boost from 77.43% to a maximum of 93.4%. The comprehensive experimentation with 28 different preprocessing methods demonstrated the importance of data preparation in enhancing model performance. Among these techniques, the combination of Rounding, Shifting, and Scaling emerged as the most effective, consistently improving accuracy across all three algorithms. This combination played a crucial role in optimizing the Random Forest classifier, making it a viable and competitive option for ASL recognition. While the Neural Network model outperformed both kNN and Random Forest in terms of average and maximum accuracy, the Random Forest classifier's performance underscores its potential as a robust and efficient model for ASL gesture recognition, particularly when preprocessing techniques are appropriately applied. The average accuracy of the Random Forest classifier over multiple runs (85.30%) reflects its stability and reliability as a classification model. In conclusion, the results of our study validate the effectiveness of the Random Forest classifier for ASL recognition, particularly with the right preprocessing pipeline. This research not only contributes to the body of knowledge in gesture recognition but also provides a foundation for developing practical, real-time ASL recognition systems that can facilitate better communication for individuals with hearing and speech impairments.
Future work could explore further enhancements to preprocessing techniques and hybrid models to achieve even higher accuracies and more robust performance in diverse real-world scenarios.

References

[1] Richard Bowden, David Windridge, Timor Kadir, Andrew Zisserman, Michael Brady, "A Linguistic Feature Vector for the Visual Interpretation of Sign Language", 8th European Conference on Computer Vision, Prague, Czech Republic, Proceedings, Part I, pp. 390-396, 2004.

[2] Anbarasi Rajamohan, R. Hemavathy, Munirathnam Dhanalakshmi, "Deaf-Mute Communication Interpreter", International Journal of Scientific Engineering and Technology, vol. 2, ISSN 2277-1581, 2013.

[3] Leigh Ellen Potter, Jake Araullo, Lewis Carter, "The Leap Motion controller: a view on sign language", in Proceedings of the 25th Australian Computer-Human Interaction Conference: Augmentation, Application, Innovation, Collaboration (OzCHI '13), Association for Computing Machinery, New York, NY, USA, pp. 175-178, 2013. DOI: https://doi.org/10.1145/2541016.2541072

[4] Shaikh Shabnam, Dr. Shah Aqueel, "Real Time Hand Gesture Recognition System: A Review".

[5] Manisha Kakde, Mahender Nakrani, Amit Rawate, "A Review Paper on Sign Language Recognition System For Deaf And Dumb People using Image Processing", International Journal of Engineering Research and Technology (IJERT), vol. 5, 2016. DOI: 10.17577/IJERTV5IS031036

[6] Shweta Shinde, Rajesh Autee, Vitthal Bhosale, "Real time two way communication approach for hearing impaired and dumb person based on image processing", pp. 1-5, 2016. DOI: 10.1109/ICCIC.2016.7919572

[7] Shivam Singh, Prof. S. Graceline Jasmine, "Face Recognition System", International Journal of Engineering Research & Technology (IJERT), vol. 08, issue 05, May 2019.

[8] Suthagar S., K. S. Tamilselvan, P. Balakumar, B. Rajalakshmi, C. Roshini, "Translation of Sign Language for Deaf and Dumb People".

[9] Pavitra Kadiyala, "Sign Language Detection", SCOPE, Vellore Institute of Technology, Vellore, India.

[10] Abhishek Kumar Saxena, Krish Shah, Mayank Soni, Shravani Vidhate, "Gesture Tool – Aiding Disabled Via AI", Dept. of Computer Science and Engineering, MIT School of Engineering, MIT ADT University, Pune, Maharashtra, India.

[11] Sunitha K. A., Anitha Saraswathi P., Aarthi M., Jayapriya K., Lingam Sunny, "Deaf Mute Communication Interpreter - A Review", International Journal of Applied Engineering Research, vol. 11, pp. 290-296, 2016.

[12] Mandeep Kaur Ahuja, Amardeep Singh, "Hand Gesture Recognition Using PCA", International Journal of Computer Science Engineering and Technology (IJCSET), vol. 5, issue 7, pp. 267-27, July 2015.

[13] Chandandeep Kaur, Nivit Gill, "An Automated System for Indian Sign Language Recognition", International Journal of Advanced Research in Computer Science and Software Engineering.

[14] Nakul Nagpal, Dr. Arun Mitra, Dr. Pankaj Agrawal, "Design Issue and Proposed Implementation of Communication Aid for Deaf & Dumb People", International Journal on Recent and Innovation Trends in Computing and Communication, vol. 3, issue 5, pp. 147-149.

[15] A. Youssif, A. Aboutabl, H. Ali, "Arabic sign language (ArSL) recognition system using HMM", Int. J. Adv. Computer Sci. Appl., vol. 2, 2011.

[16] P. Dreuw, D. Rybach, T. Deselaers, M. Zahedi, H. Ney, "Speech Recognition Techniques for Language

Copyright

Copyright © 2024 Divyanshu Pal, Asst. Professor Rohini Sharma, Dheeraj, Abdul Bazid, Gaurav Chandel. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.


Paper Id : IJRASET63056

Publish Date : 2024-06-01

ISSN : 2321-9653

Publisher Name : IJRASET
